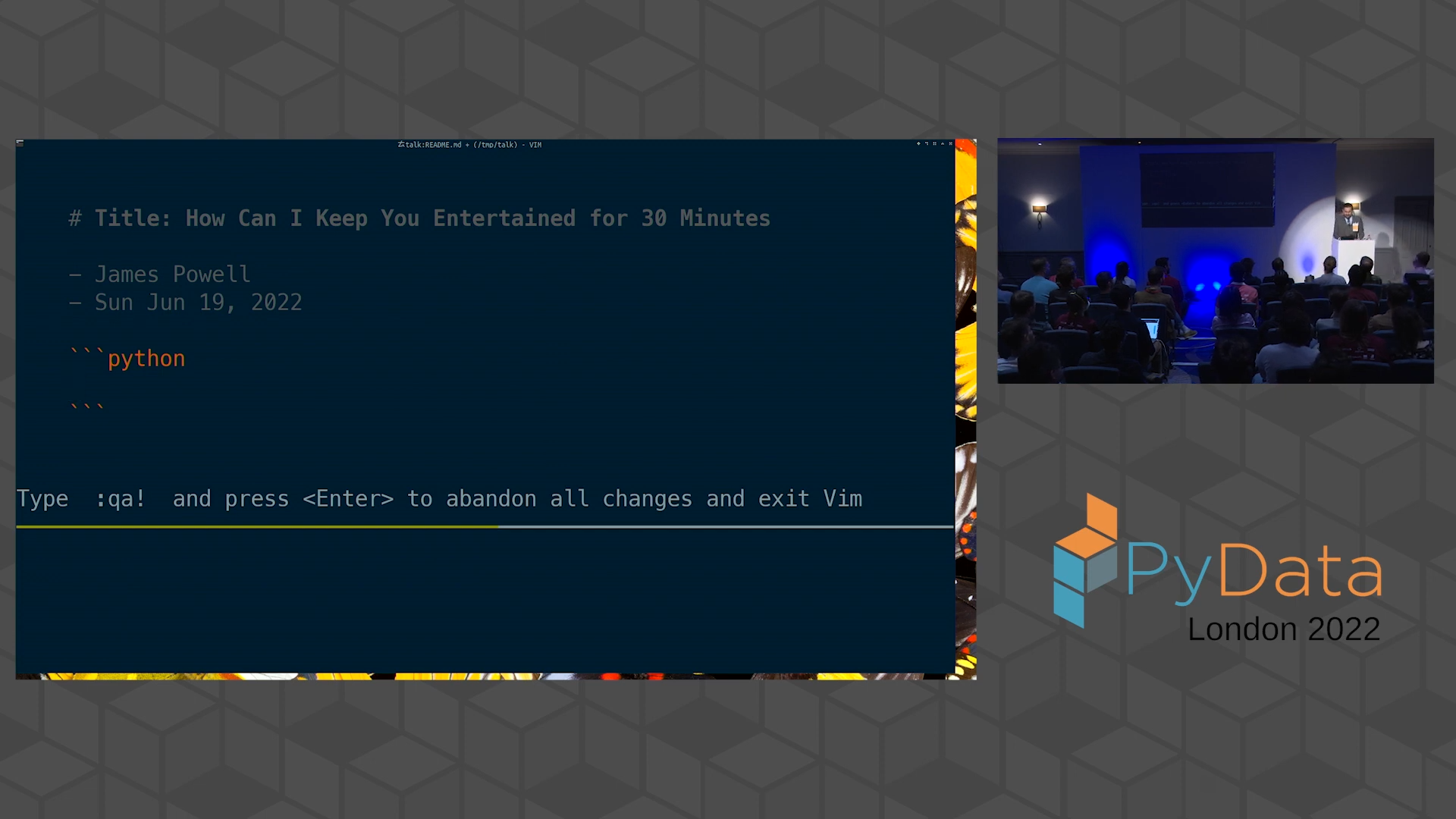

source: James Powell - Whatever I Can do to Entertain You in 30 Minutes | PyData London 2022

I used the Language Reactor extension to export the transcript to Excel and then converted it to JSON. Here is a shortened sample of the data:

{

"5s": "okay let me get started i'll give you the",

"7s": "premise the premise is the issues are not av related the issues are that my",

"12s": "laptop where i was up until 4am last night writing this talk",

"16s": "did not boot at all and as a consequence of that my notes that i spent quite a",

"21s": "bit of time putting together are not available to you but i am still on the",

"24s": "hook to keep you entertained for 30 minutes so we're going to see what i can",

"...": "...",

"25:55": "that makes it possible for us to write code that",

"25:58": "put some structuring around the core computation now with that said i hope i",

"26:03": "was able to entertain you i think we're just at the time that we have",

"26:07": "thank you everybody i'm james powell hopefully i'll give the real talk",

}

And this is the summary by Clauder AI:

James Powell’s impromptu talk at PyData London 2022 covers several key points about Python programming, particularly aimed at data scientists. Here are the main points he’s trying to convey:

- Python is more than just APIs and function calls: Many data scientists focus solely on memorizing APIs (like Pandas or NumPy) without truly understanding the language’s depth and richness.

- Generators are underutilized: Generators can be powerful tools, especially for simulations or non-closed form operations where each incremental step is computationally expensive.

- Lazy vs. Eager computation: Understanding when to use lazy or eager computation can significantly impact performance, especially in data science tasks.

- Functions should not be overused: There’s a tendency to create functions for any repeated code, but this isn’t always the best approach. Powell distinguishes between intentional and coincidental repetition.

- Avoid premature abstraction: Creating functions deep in libraries, especially for data loading, can lead to issues with testing and maintenance. Sometimes, ad-hoc behavior is preferable.

- Iteration helpers and intentionality: Tools like `enumerate`, `zip`, and custom iteration helpers can make code more expressive and reveal the programmer’s intentions more clearly.

- Understanding Python conventions: Looking beyond the surface-level functionality of Python’s standard library (e.g., `itertools`) can reveal patterns and conventions that lead to better code.

- Code should express human intention: Loops in data science code often represent human-level processes rather than low-level computations. The code structure should reflect this.

- Flexibility in code structure: Allowing code to diverge when necessary, rather than forcing premature unification, can lead to more maintainable and adaptable codebases.

- Critical thinking about basic tools: Even when using basic Python constructs, data scientists should think deeply about why they’re using them and what alternatives might exist.
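To make the points about generators and lazy versus eager computation concrete, here is a minimal sketch. It is not code from the talk: `random_walk` and `random_walk_eager` are hypothetical names chosen for illustration, and the random walk simply stands in for any simulation whose steps are produced one at a time.

```python
import random
from itertools import islice, takewhile


def random_walk(start=0.0, step=1.0, seed=None):
    """Lazily yield successive positions of a simple 1-D random walk."""
    rng = random.Random(seed)  # private RNG so a seed makes the walk repeatable
    position = start
    while True:  # infinite on purpose: the caller decides when to stop
        yield position
        position += rng.choice((-step, step))


def random_walk_eager(n, start=0.0, step=1.0, seed=None):
    """Eager counterpart: compute all n positions up front and return a list."""
    return list(islice(random_walk(start, step, seed), n))


# Lazy: only the five positions actually requested are computed.
first_five = list(islice(random_walk(seed=0), 5))

# The stopping rule lives with the caller, not inside the simulation.
# islice caps the run at 1000 positions as a safety net.
near_origin = list(
    islice(takewhile(lambda p: abs(p) < 3, random_walk(seed=0)), 1000)
)
```

The generator version leaves the stopping condition to the caller, so the same simulation can serve "give me five steps" and "run until the walk drifts three units away" without changing its body. The eager version has to be told `n` in advance.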

Overall, Powell encourages data scientists to look beyond superficial knowledge of Python and its libraries, urging them to understand the language more deeply and use its features more thoughtfully to write more intentional, maintainable, and expressive code.
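As a small illustration of the iteration-helper point from the summary, the sketch below contrasts an index-based loop with versions built on `enumerate`, `zip`, and a custom helper. The data and the helper name `adjacent_pairs` are made up for this example and do not come from the talk. Python 3.10+ ships a similar helper as `itertools.pairwise`.

```python
from itertools import tee


def adjacent_pairs(iterable):
    """Yield (previous, current) pairs from any iterable."""
    previous, current = tee(iterable)
    next(current, None)  # advance the second iterator by one item
    return zip(previous, current)


# Hypothetical sample data.
labels = ["mon", "tue", "wed", "thu"]
readings = [10.0, 10.5, 9.8, 11.2]

# Index-based version: the intent (compare neighbours) hides behind arithmetic on i.
changes_indexed = []
for i in range(1, len(readings)):
    changes_indexed.append(readings[i] - readings[i - 1])

# Helper-based version: the loop header states the intent directly.
changes = [current - previous for previous, current in adjacent_pairs(readings)]

# enumerate and zip say "number these" and "walk these together".
numbered = [
    (n, label, value)
    for n, (label, value) in enumerate(zip(labels, readings), start=1)
]
```

Both versions compute the same differences. What changes is how much of the loop describes the task and how much describes bookkeeping.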